How to

Data Quality, Simplified: Mesoica's Integration with Azure Data Factory/Synapse Pipelines

Introduction

Clean and accurate data are crucial for making effective decisions. Mesoica understands the vital role that data quality plays in modern businesses, as well as the challenges involved in managing data effectively. Data quality is often approached from a technical perspective, but we believe the business should be in the driver’s seat.

In our "how-to" series, we demonstrate how you can integrate your data platform with Mesoica for a wide range of use cases. In this installment, we focus on adding data quality checks to existing Azure Data Factory pipelines. We will create an end-user-friendly early warning system that ensures data quality from source to reports and dashboards. This integration empowers business users to proactively address data quality issues, preventing further problems downstream and enabling informed decisions.

Let's get started and dive right in.

What is Azure Data Factory?

Azure Data Factory is a cloud-based data integration service offered by Microsoft. It serves as a fundamental component in the Azure ecosystem, designed to empower organizations to manage and transform data seamlessly. At its core, Azure Data Factory allows businesses to create, schedule, and orchestrate data workflows, facilitating the movement of data from a variety of sources to multiple destinations. With support for diverse data sources, including files, databases, and cloud services, it provides a flexible and efficient platform for data extraction, transformation, and loading (ETL) processes.

Azure Data Factory data pipelines can also encompass a range of data processing activities. These pipelines can execute tasks such as data movement, transformation, and dataflow control, allowing users to shape and manipulate data according to their unique requirements.

What is Mesoica? Introducing the Mesoica data quality platform

Now that you're familiar with Azure Data Factory, let's introduce another key player in this story: the Mesoica data quality platform.

Mesoica is designed to streamline your data quality processes by continuously monitoring datasets in your data landscape. This proactive approach allows you to identify and address issues before they morph into bigger problems.

At the heart of our platform is a mission to simplify and optimize data processes, helping you unlock the full potential of your data. With Mesoica, you can gain actionable insights, make informed decisions, and achieve data-driven success with confidence and ease.

In the next section, we'll show you how to connect Azure Data Factory to Mesoica, bringing the full power of automated data quality checks to your users!

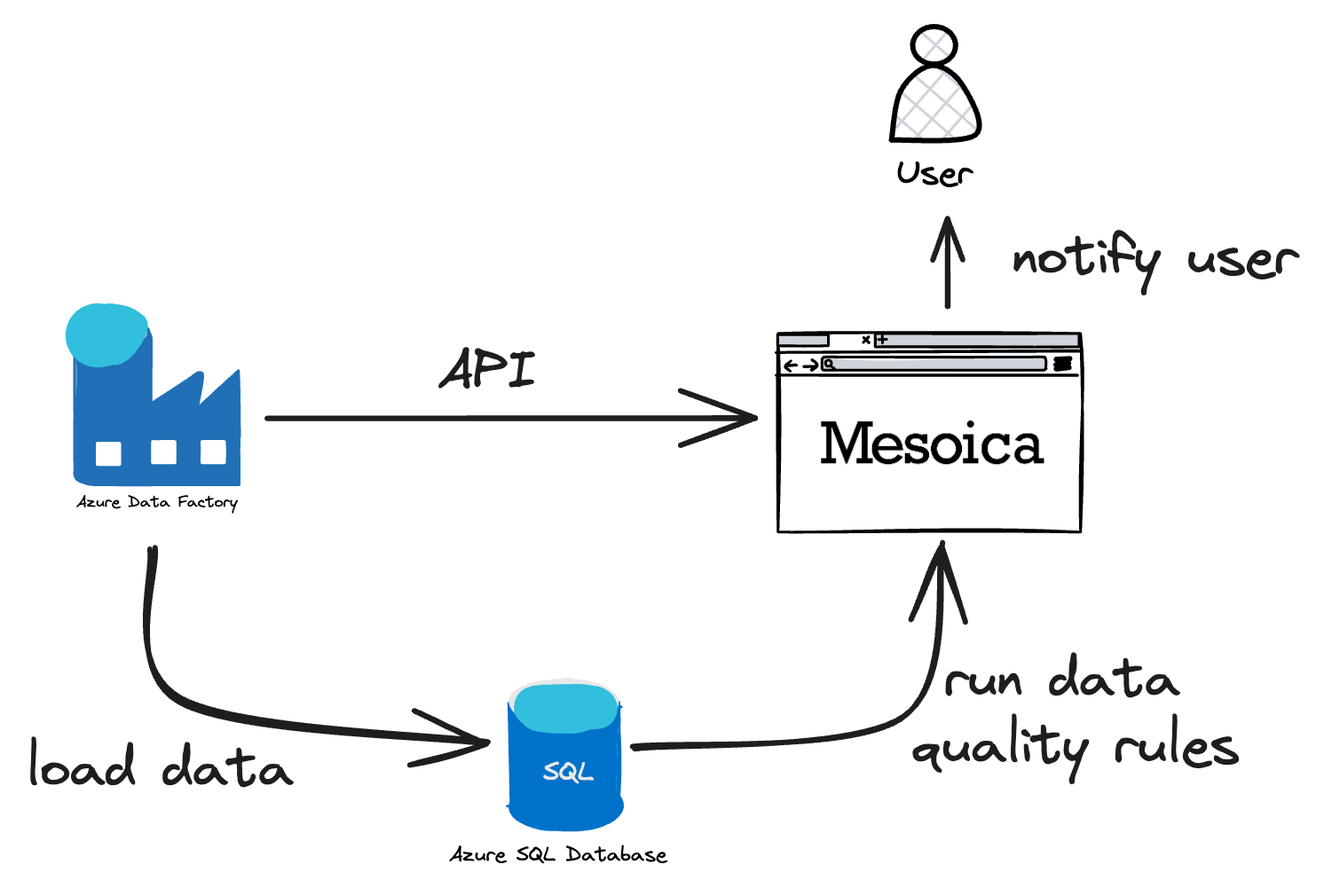

The integration

The integration is designed to fit seamlessly into the "traditional" ETL (Extract, Transform, Load) pipeline structure. In this process, data is first extracted from diverse sources, including files, databases, or tables. It then undergoes a series of operations, such as combining, filtering, and aggregating, before landing in a target table.

However, what sets this integration apart is its focus on what happens post-transformation. Once the ETL pipeline completes, the integration automatically initiates a validation run on the freshly transformed or loaded data. This validation step ensures that the data meets the predefined quality standards.

As a result, the Mesoica application springs into action, notifying the relevant end users while providing them with in-depth information about any identified data quality issues. This timely notification equips users with the knowledge required to address and rectify any potential data quality concerns, thus safeguarding the overall quality and integrity of the data.

Steps to integrate an existing pipeline to the Mesoica platform

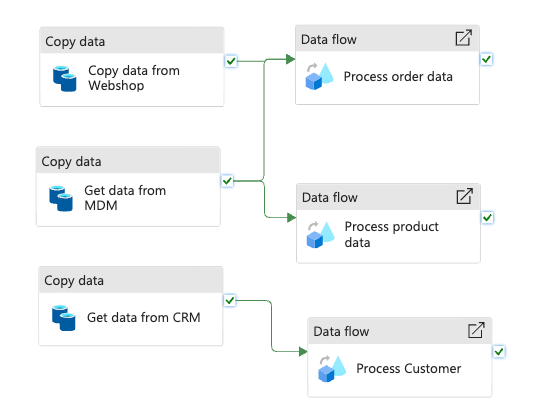

For this example, we will illustrate the integration using an existing sample data pipeline. This pipeline extracts data from three distinct source systems:

- The webshop, which contains order data

- The master data system, which houses product information

- The CRM system, which holds customer data

These data feeds are then loaded into target tables, designed to cater to a series of reporting requirements and to provide data to various BI dashboards and analytics tools. It's important to note that there are various approaches to structuring workflows of this nature, and the one presented here is just one among many possibilities.

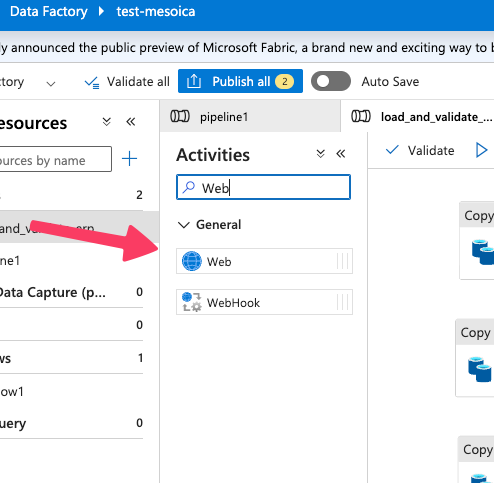

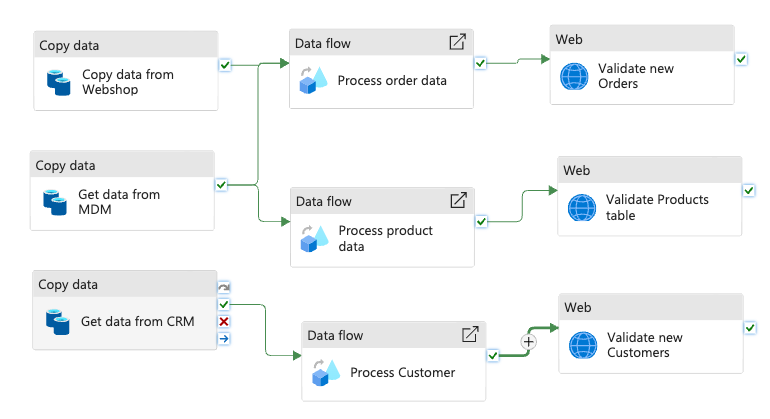

Once the "Data flow" activities shown in the flow chart complete successfully, the data load is finished. The next step is to trigger the validation rules defined in Mesoica. This takes a single API call, made with the ADF "Web activity".

To add this to your pipeline, search for the Web activity and drag it onto the canvas. Make sure it is connected to the existing data flow activities so that it runs only after they complete. To configure the Web activity, navigate to the Settings tab and follow these steps:

- URL: Provide the URL of the API endpoint. Each client operates its own independent instance of the Mesoica platform, so the URL is specific to your instance.

- Method: In this context, we perform a POST request to the API.

- Body: Prepare a JSON payload that includes an identifier and the necessary configuration details. This payload tells Mesoica which set of rules to execute.

- Headers: Include two headers:

  - Set the "content-type" header to "application/json".

  - Set the "Authorization" header using the format "Token <token supplied by Mesoica>".
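For readers who prefer the pipeline's JSON view over the canvas, the settings above can be sketched as a Web activity definition. The Python snippet below is a minimal illustration, not Mesoica's official configuration: the activity names, the endpoint URL, and the "identifier" field in the body are placeholders assumed for the example, and only the POST method, the two headers, and the dependency on the upstream activities come from the steps above.

```python
import json


def build_web_activity(name, url, token, body, depends_on):
    """Return an ADF Web activity definition as a plain dict."""
    return {
        "name": name,
        "type": "WebActivity",
        # Run only after every listed upstream activity has succeeded.
        "dependsOn": [
            {"activity": upstream, "dependencyConditions": ["Succeeded"]}
            for upstream in depends_on
        ],
        "typeProperties": {
            "url": url,
            "method": "POST",
            "headers": {
                "Content-Type": "application/json",
                "Authorization": f"Token {token}",
            },
            "body": body,
        },
    }


activity = build_web_activity(
    name="TriggerMesoicaValidation",  # hypothetical activity name
    url="https://<your-instance>/<validation-endpoint>",  # placeholder, instance-specific
    token="<token supplied by Mesoica>",
    body={"identifier": "<rule-set-identifier>"},  # field name is an assumption
    depends_on=["LoadOrders", "LoadProducts", "LoadCustomers"],  # hypothetical names
)

# Print the fragment so it can be compared with the pipeline's JSON view.
print(json.dumps(activity, indent=2))
```

The token is written inline here only to keep the sketch short. In a real pipeline you would likely keep it out of the definition rather than hard-coding it.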

When you are done, this is how it should look in the end:

Once everything is properly configured, you can proceed to validate and save your updated data pipeline. After saving, it's time to execute the pipeline. If any data quality issues are detected during this process, they will promptly surface on the Mesoica data quality dashboard, as illustrated below. This intuitive dashboard serves as your real-time window into the health of your data, ensuring that you're well-informed about any quality concerns as they arise.

![Data quality dashboard](/_next/static/media/mesoica-monitor.a9acbdba.svg)
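If the Web activity fails, it can help to reproduce the same call outside ADF to check the URL and token. The sketch below builds the equivalent request with Python's standard library. The URL and the "identifier" payload field are hypothetical stand-ins for your instance's actual values, and the request is only constructed, not sent.

```python
import json
import urllib.request


def build_validation_request(url, token, payload):
    """Build (but do not send) the POST request the Web activity would make."""
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Token {token}",
        },
    )


request = build_validation_request(
    url="https://mesoica.example.invalid/validate",  # hypothetical endpoint
    token="<token supplied by Mesoica>",
    payload={"identifier": "<rule-set-identifier>"},  # field name is an assumption
)
print(request.get_method(), request.full_url)

# To actually send it, replace the placeholders above and uncomment:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(response.status)
```

Building the request separately from sending it makes it easy to inspect the method, headers, and body before any call reaches your Mesoica instance.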

Conclusion

That’s all there is to it. You’re on your way to integrating data quality into all of your data pipelines in just a few simple clicks.

From the above you’ve seen how easy it is to integrate continuous data quality into new and existing data pipelines. The benefits of this integration are threefold, designed to simplify data quality management and make it accessible to a broader audience:

Quick Exposure of Data Quality to Non-Technical Users:

The integration brings data quality into the spotlight for non-technical users. In a traditional ETL pipeline setting, data quality is often viewed as a technical matter. With this integration, it becomes visible and understandable to business users and data stakeholders. The data's reliability and integrity are easy to see, offering confidence in data-driven decisions.

Non-Technical Rule Definition:

In the past, ensuring data quality required technical expertise, often involving SQL or other complex development languages. However, Mesoica changes the game. Data quality rules can be defined in a non-technical manner, allowing business users and data stakeholders to set and manage rules without needing technical skills. This simplifies the process and extends data quality management to a wider audience within your organization.

Simplicity of Integration:

Integrating Azure Data Factory with Mesoica DQ is remarkably straightforward: it involves a single API call. Because the impact on existing pipelines is minimal, your current data operations can continue unaffected while benefiting from enhanced data quality and validation.

If this looks interesting to you, it's time to take action. Book a demo so we can show you how to unlock the full potential of your data, seamlessly incorporate Mesoica DQ into your new or existing data pipelines, and start experiencing the immediate benefits. Whether you're in charge of data management or an end user seeking reliable data, this integration has the power to transform the way you work with data. Get started today, and embark on a journey towards data quality, simplicity, and informed decision-making.

Mesoica’s data quality platform is specifically designed to help LPs and GPs manage their data efficiently. By using our platform, you can seamlessly collect, validate, and monitor data, enhancing communication and collaboration. Our scalable solution adapts to your organization's growing data needs, providing peace of mind and enabling you to become a truly data-driven organization. Start your journey today by visiting our website or contacting us to learn more about how Mesoica can empower your firm to continuously improve data quality.